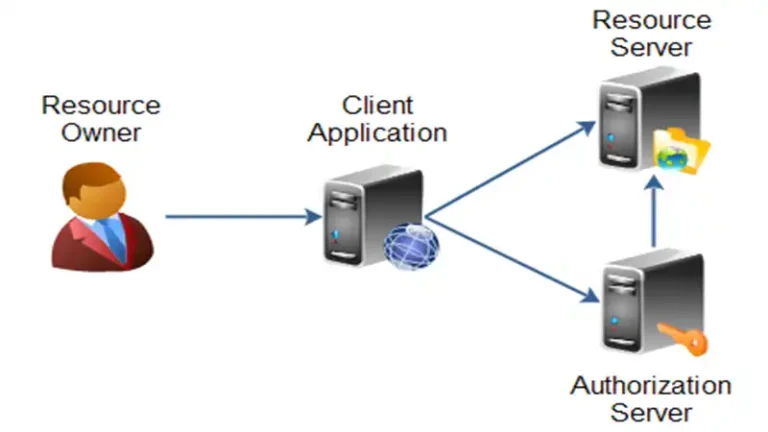

How to Implement Authorization Code Flow With OWIN | Explained

Implementing the Authorization Code Flow with OWIN (Open Web Interface for .NET) is a crucial step in ensuring secure authentication and authorization within web applications. This flow is widely used to issue access tokens to client applications on behalf of users without exposing user credentials to the client. By following a few key steps, developers can integrate this flow seamlessly into…

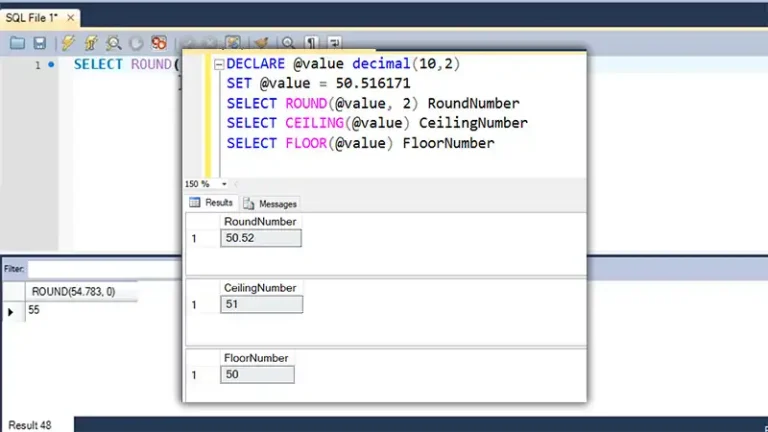
