Does AWS Have a Dirty Little Secret?

I was recently talking with a colleague of mine about where AWS is today. Obviously, companies are migrating to EC2 & the cloud rapidly. The growth rates are staggering.

The question was…

“What’s good and bad with Amazon today?”

It’s an interesting question. I think there are some dirty little secrets here, but also some very surprising bright spots. This is my take.

Dirty Little Secrets of AWS

These are all from my personal perspective:

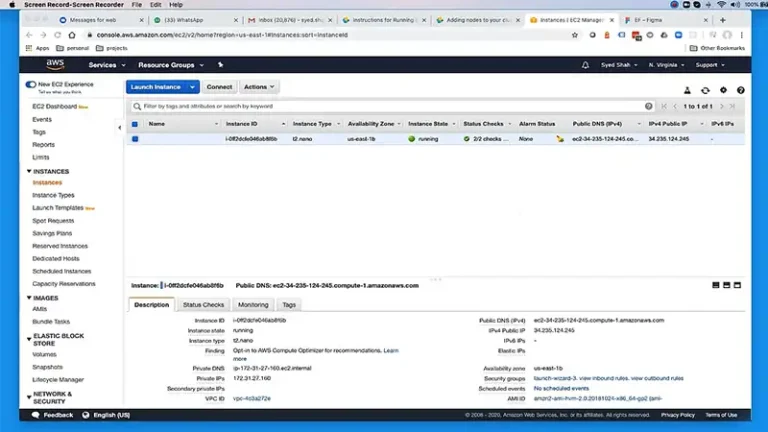

1. VPC Is Not Well Understood (FAIL)

This is the biggest one in my mind. Amazon’s security model is all new to traditional ops folks. Many customers I see deploy in “classic EC2”. Others deploy haphazardly in their own VPC, without a clear plan.

The best practice is to have one or more VPCs, each with private & public subnets. Put databases in the private subnet and webservers in the public one. Then create a jump box in the public subnet and funnel all ssh connections through it. Allow any source IP on that box, use individual user accounts for authentication & auditing (only on this box), and add google-authenticator for two-factor at the command line. This setup also provides an easy way to decommission accounts and lock out users who leave the company.
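To make that layout concrete, here is a minimal sketch in plain Python (no AWS calls) that carves a VPC range into a public and a private subnet and models the access rules as data. The CIDR range, the jump box address, the ports (443 for web, 3306 for the database) and the `is_allowed` helper are all assumptions for illustration, not anything AWS prescribes.

```python
import ipaddress

# Hypothetical address range for the VPC; any private /16 would do.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")

# Carve the VPC into /24 subnets and take the first two.
_subnets = VPC_CIDR.subnets(new_prefix=24)
PUBLIC_SUBNET = next(_subnets)   # webservers and the jump box
PRIVATE_SUBNET = next(_subnets)  # databases

ANYWHERE = ipaddress.ip_network("0.0.0.0/0")
JUMP_BOX = ipaddress.ip_network("10.0.0.10/32")  # hypothetical jump box address

# Security-group-style allow rules:
# (destination network, port, allowed source network)
RULES = [
    (JUMP_BOX, 22, ANYWHERE),               # ssh to the jump box from any source IP
    (VPC_CIDR, 22, JUMP_BOX),               # ssh to everything else only via the jump box
    (PUBLIC_SUBNET, 443, ANYWHERE),         # web traffic to the public subnet
    (PRIVATE_SUBNET, 3306, PUBLIC_SUBNET),  # database reachable only from webservers
]

def is_allowed(source, dest, port):
    """Return True if any rule permits traffic from source to dest on port."""
    src = ipaddress.ip_address(source)
    dst = ipaddress.ip_address(dest)
    return any(
        dst in dest_net and port == rule_port and src in src_net
        for dest_net, rule_port, src_net in RULES
    )

if __name__ == "__main__":
    print(is_allowed("203.0.113.7", "10.0.0.10", 22))   # outside world -> jump box
    print(is_allowed("203.0.113.7", "10.0.1.5", 22))    # outside world -> database host
    print(is_allowed("10.0.0.20", "10.0.1.5", 3306))    # webserver -> database
```

Writing the rules down as a small table like this is also a cheap way to review a plan before touching any production security groups.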

However, most customers have done little of this, or some mixture but not all of it. So GETTING TO BEST PRACTICES around VPC would mean deploying a VPC as described, then moving each and every one of your boxes & services over there. Imagine the risk to production services. Imagine the chances of error, even if you’re using Chef or your own standardized AMIs.

Also: Are we fast approaching cloud-mageddon?

2. Feature Fatigue (FAIL)

Another problem is a sort of “paradox of choice”. Amazon is releasing so many new offerings so quickly that few engineers know them all. So, you find a lot of shops implementing things wrong, or building something themselves, because they didn’t understand a feature. In other words, AWS had already solved the problem.

OpenRoad comes to mind. They’ve got media files on the filesystem, when S3 is plainly Amazon’s purpose-built service for this.

Is AWS too complex for small dev teams & startups?

Related: Does Amazon eat its own dogfood? Apparently yes!

3. Required Redundancy & Automation (FAIL)

The model here is what Netflix has done with Chaos Monkey. They literally knock machines offline to test their setup. The problem is detected, and new hardware is brought online automatically. Deploying across AZs is another example. As Amazon says, we give you the tools, it’s up to you to implement the resiliency.

But few firms do this. They’re deployed on Amazon as if it’s a traditional hosting platform. So, they’re at risk in various ways: of Amazon outages, of hardware problems under the VMs, of EBS network issues, of localized outages, etc.
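The knock-it-over-and-recover loop is easy to picture with a toy simulation. The sketch below is not Netflix's tool and makes no AWS calls; the zone names, the target of two instances per AZ, and the function names are all made up for illustration. It terminates a random instance, then runs a reconcile step that notices the gap and launches a replacement in the same zone.

```python
import itertools
import random

AZS = ["us-east-1a", "us-east-1b", "us-east-1c"]  # example zone names
DESIRED_PER_AZ = 2  # hypothetical target capacity per zone

def build_fleet():
    """Start with the desired number of healthy instances in every AZ."""
    return {az: [f"{az}-i{n}" for n in range(DESIRED_PER_AZ)] for az in AZS}

def chaos_monkey(fleet, rng):
    """Terminate one randomly chosen instance, as a chaos test would."""
    az = rng.choice([a for a in AZS if fleet[a]])
    victim = rng.choice(fleet[az])
    fleet[az].remove(victim)
    return victim

def reconcile(fleet, ids):
    """Detect missing capacity and launch replacements automatically."""
    launched = []
    for az in AZS:
        while len(fleet[az]) < DESIRED_PER_AZ:
            new_id = f"{az}-r{next(ids)}"
            fleet[az].append(new_id)
            launched.append(new_id)
    return launched

def run_experiment(rounds=5, seed=0):
    """Alternate failures and recovery, returning the final fleet."""
    rng = random.Random(seed)
    ids = itertools.count(1)
    fleet = build_fleet()
    for _ in range(rounds):
        chaos_monkey(fleet, rng)
        reconcile(fleet, ids)
    return fleet

if __name__ == "__main__":
    for az, instances in run_experiment().items():
        print(az, instances)
```

If the reconcile step is missing, which is the traditional-hosting mindset, every simulated failure permanently shrinks the fleet.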

Read: Is Amazon too big to fail?

4. Lambda (WIN)

I went to the serverless conference a week ago. It was exciting to see what is happening. It is truly the *bleeding edge* of the cloud. IBM & Azure & Google all have a serverless offering now.

The potential here is huge. Eliminating *ALL* of the server management headaches, from packages to config management & scaling, hiding all of that could have a huge upside. What’s more, it takes the on-demand model even further. You have no compute running idle until you hit an endpoint. Cost savings could be huge. Wonder if it has the potential to cannibalize Amazon’s own EC2 … we’ll see.
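For a sense of how little there is to manage, here is a minimal sketch of a Python function in the shape Lambda invokes: a handler that takes an event and a context. It assumes an API Gateway proxy-style event with a `queryStringParameters` field and a response dict with `statusCode` and `body`; the name `handler` and the greeting logic are just placeholders.

```python
import json

def handler(event, context):
    """Entry point invoked once per request; nothing runs between calls."""
    # queryStringParameters may be absent or None when no query string is sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }

if __name__ == "__main__":
    # Local invocation with a fake event; no server, packages or scaling config.
    print(handler({"queryStringParameters": {"name": "ops"}}, None))
```

Everything outside that function, from the OS packages to scaling, is the platform's problem, which is exactly where the appeal comes from.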

Charity Majors wrote a very good critical piece – WTF is Operations? #serverless

From serverless to Service Full – How the mindset of devops is evolving from Patrick Debois

Also: Is the difference between dev & ops a four-letter word?

5. Redshift (WIN)

Seems like *everybody* is deploying a data warehouse on Redshift these days. It’s no wonder, because they already have their transactional database, the web backend, on RDS of some kind. So, it makes sense that Amazon would build an offering for reporting.

I’ve heard customers rave about reports that took 10 hours on MySQL running in under a minute on Redshift. It’s not surprising, because MySQL wasn’t built for the size of servers it’s being deployed on today. So, it doesn’t make good use of all that memory. Even with SSD drives, query plans can execute badly.

Conclusion

AWS has some dirty little secrets, but also some bright spots. The biggest issue is that many customers don’t fully understand Amazon’s security model, particularly with VPC. Feature fatigue is also a problem, with so many new offerings being released quickly. Required redundancy and automation are often neglected, leaving firms at risk. However, Lambda and Redshift are big wins for AWS. Lambda eliminates server management headaches, while Redshift offers fast reporting capabilities.