Thinking Deeply About Amazon Cloud & Infrastructure Code

If you’re building anything in the public cloud these days, you’re probably using some automation. There are a lot of ways to reach the goal posts and a lot of tools to choose from.

In my case I’ve put Terraform to use over and over again. I’ve built VPCs, public & private subnets, and bastion boxes for mobile apps for mental health & fitness, building security, and two-factor authentication.

I’ve chosen Terraform because it has a vibrant & growing community, the usability is miles ahead of CloudFormation, and it can work in a multi-cloud environment.

But this article isn’t about choice of tools. I’m curious about this one question:

“What architectural considerations should I keep in mind as I build my infrastructure code?”

Here are my thoughts on that one…

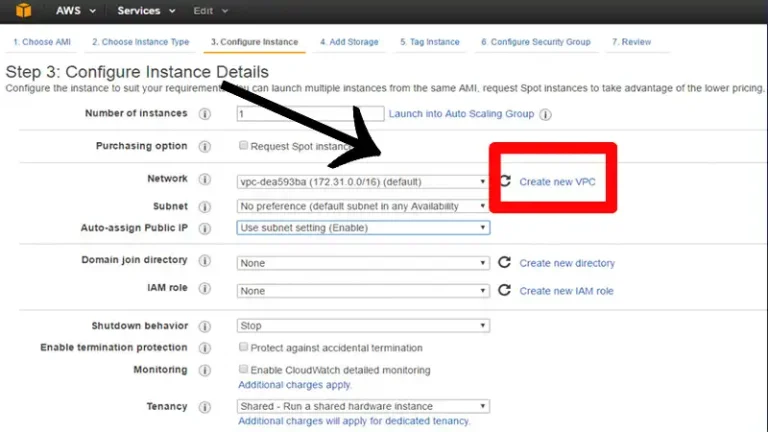

The VPC Is Your Fundamental Container

Everything you build sits inside of a VPC. Your entire stack references back to those variables, including vpc-id, private and public subnet IDs.

Here’s an example where you can get into trouble. Digging through some infra code while reviewing it with a new devops hire, we went through everything with a fine-toothed comb. We found that the RDS instance was being deployed in a public subnet instead of a private one.

Alerted to the problem, we first checked to see whether it was accessible from the internet at large. It wasn’t, because we had not exposed a public facing IP. That said it wasn’t the most secure setup and I wanted to fix it.

I made some changes to the Terraform code, to update the subnet to private, and tried “$ terraform apply”. Then I got all sorts of errors. Try as I might, this update would not work.

Sadly, the long term solution was to destroy the entire stack, and rebuild with RDS in the right place. Lesson learned.
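A check like the one below could have caught this before the first deploy. It is only a minimal sketch: the shape of the `route_tables` data and the function names are my own assumptions, not anything AWS or Terraform hands you in this form. The one fact it relies on is that a subnet is public when its route table has a route to an internet gateway (an ID starting with `igw-`).

```python
from typing import Iterable, List, Set


def public_subnet_ids(route_tables: List[dict]) -> Set[str]:
    """Return subnet IDs whose route table routes to an internet gateway.

    Each table is a hypothetical dict: {"subnet_ids": [...], "routes": [...]}.
    """
    public = set()
    for table in route_tables:
        has_igw_route = any(
            (route.get("gateway_id") or "").startswith("igw-")
            for route in table.get("routes", [])
        )
        if has_igw_route:
            public.update(table.get("subnet_ids", []))
    return public


def misplaced_db_subnets(db_subnet_ids: Iterable[str],
                         route_tables: List[dict]) -> List[str]:
    """Return the database subnets that turn out to be public."""
    return sorted(set(db_subnet_ids) & public_subnet_ids(route_tables))
```

Run something like this against the subnets you plan to hand to RDS, and fail the build if the returned list is not empty.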


Why I Discovered A Shared Or Utility VPC Was So Useful

- Story of placing ELK inside an application VPC


Think Carefully About Domains

As you build your application, you’ll likely need a Route 53 zone associated with it. And you’ll want a CNAME in front of your load balancer, so it’s easy for customers to hit your endpoint.

1. rebuilding stack means new zone & new nameservers

If your registrar is elsewhere, you’ll need to update nameservers each time you destroy & build the zone. This happens even if you host the domain at AWS. And it can’t be automated at the moment.
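The update itself stays manual, but you can at least flag when it’s needed. Here is a rough sketch that compares the nameservers on the freshly built zone with the ones your registrar still points at. Both lists are assumed to be fetched by you somehow, and the function names are made up for illustration.

```python
from typing import Iterable


def normalize(nameserver: str) -> str:
    """Lowercase and drop whitespace and the trailing dot used in DNS notation."""
    return nameserver.strip().rstrip(".").lower()


def nameservers_in_sync(zone_ns: Iterable[str],
                        registrar_ns: Iterable[str]) -> bool:
    """True when the registrar delegates to exactly the zone's nameservers."""
    zone = {normalize(ns) for ns in zone_ns}
    registrar = {normalize(ns) for ns in registrar_ns}
    return zone == registrar
```

If this returns False after a rebuild, that’s your cue to go update the registrar by hand.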

You could also have the zone created *outside* Terraform. Then your Terraform code would reference that zone by its zone ID and add CNAMEs to it. This is another possible pattern.

The pattern I prefer is to have each VPC & stack have its own unique top level domain. That way Terraform can cleanly create and destroy the whole stack and nothing is commingled.


Enable Easy Create & Destroy

Each time you tear up your work and rebuild, you test the whole process. This is good. Iron out those hiccups before they cause you trouble. After some time, you’ll be able to move your entire application stack, db, ec2 instances, vpc & network resources from one region to another easily & quickly.

After doing this a few times, you’ll start to learn what resources in AWS are region specific. And which ones are global.

Remember, don’t allow any manually created objects or resources inside your automated ones. If you aren’t strict here, you’ll hit errors when you try to destroy, and then have to troubleshoot those one-by-one.
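One way to spot strays before a destroy is to compare what Terraform manages with what actually exists. The sketch below assumes you have the parsed output of `terraform show -json` (resources under `values.root_module`, with nested `child_modules`) and a list of resource IDs you found in the account by other means. The function names are hypothetical.

```python
from typing import Iterable, List, Set


def managed_ids(state: dict) -> Set[str]:
    """Collect the IDs of all managed resources, including those in child modules."""
    ids = set()

    def walk(module: dict) -> None:
        for resource in module.get("resources", []):
            # Skip data sources: Terraform only reads those, it doesn't own them.
            if resource.get("mode") == "managed":
                resource_id = resource.get("values", {}).get("id")
                if resource_id:
                    ids.add(resource_id)
        for child in module.get("child_modules", []):
            walk(child)

    walk(state.get("values", {}).get("root_module", {}))
    return ids


def unmanaged_ids(found_ids: Iterable[str], state: dict) -> List[str]:
    """Return IDs that exist in the account but not in the Terraform state."""
    return sorted(set(found_ids) - managed_ids(state))
```

Anything this returns was probably created by hand, and is a likely culprit when a destroy fails.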


Automate First

I was building an ELK server setup to centralize our logging infra. Everything worked pretty well. After a time, I added some more S3 buckets for load balancer log ingestion.

Later we hit a problem, where the root volume was filling up. This stopped new logs from appearing. So, we rebuilt the ELK server with a 10x larger root volume. As we had used Terraform and Ansible, the rebuild was easy. And quickly we had our logging system back online.

A week or so later though we had trouble again. It seemed that some of the load balancer log data wasn’t showing up. We spent a day troubleshooting, and eventually found out why. Those S3 buckets weren’t being ingested.

Turns out when we added those, we added them to the config file *directly* on the server, but not in the configuration scripts.

Moral of the story…

“Always update the automation scripts first, and apply those to the server. Don’t work on the server directly.”
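A cheap guard against this kind of drift is to diff the config your automation would render against what is actually sitting on the server. This is just a sketch using Python’s standard `difflib`. How you fetch the two strings is up to you, and the function name is my own.

```python
import difflib
from typing import List


def config_drift(rendered: str, deployed: str) -> List[str]:
    """Return a unified diff between the automation's config and the server's.

    An empty list means the server matches the automation.
    """
    return list(difflib.unified_diff(
        rendered.splitlines(),
        deployed.splitlines(),
        fromfile="automation",
        tofile="server",
        lineterm="",
    ))
```

Lines starting with `+` exist only on the server, which is exactly what our hand-added S3 buckets would have looked like.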


Beware Of Account Limits

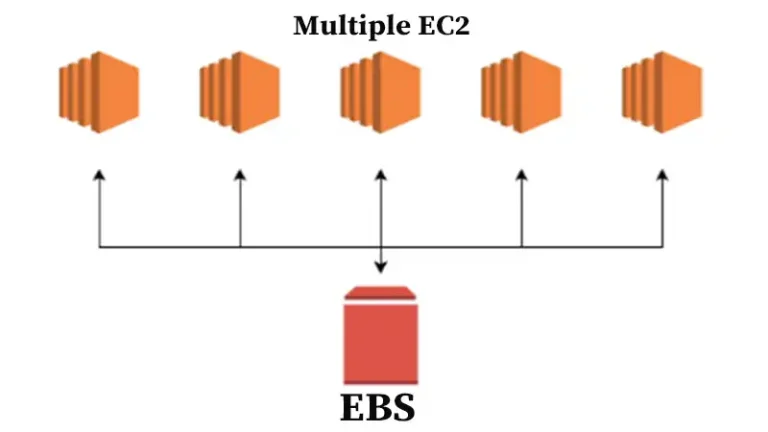

As you’re building your stack in us-east-1, you may later go and try to create another copy of it. Suddenly AWS complains that there are no VPCs left. Or you’ve hit a maximum of 20 EC2 instances.

While these errors may be irritating, you should be glad to have them. With them in place, an errant piece of infra code or application cannot accidentally run up your account and leave you with a surprise bill.

That said you should be mindful of those limits, and increase them before your application hits a wall.

A few that I’ve run into:

- 20 EC2 instances per region

- 5 Elastic IPs per region

- 5 VPCs per region
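Before standing up another copy of a stack, a quick pre-flight check against those numbers can save you a failed apply. The sketch below hard-codes the three limits listed above as defaults. Your account’s real limits may differ, and the resource keys and function name are made up for illustration.

```python
from typing import Dict, Tuple

# Per-region limits from the list above; treat these as assumptions
# and replace them with whatever your account actually allows.
DEFAULT_LIMITS = {"ec2_instances": 20, "elastic_ips": 5, "vpcs": 5}


def over_limit(current: Dict[str, int],
               planned: Dict[str, int],
               limits: Dict[str, int] = DEFAULT_LIMITS) -> Dict[str, Tuple[int, int]]:
    """Return {resource: (total_after_build, limit)} for each limit that would be exceeded."""
    problems = {}
    for name, limit in limits.items():
        total = current.get(name, 0) + planned.get(name, 0)
        if total > limit:
            problems[name] = (total, limit)
    return problems
```

For example, with 3 Elastic IPs in use and 3 more planned, the result flags `elastic_ips` as `(6, 5)`, so you know to request an increase first.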

What Resources Live on Past A Stack Build/Destroy Cycle

As you build your stack with infrastructure code, you’ll tear it down again often. Each time you do this, you’ll be reminded of one thing. Any data inside there will be gone.

That means for starters don’t store things in the filesystem. Store them in a database. RDS is great for this purpose. Then the question becomes, when I destroy my stack, how can I back up and restore my database? RDS does support this, but if you have more nuanced requirements, you may have to build your own backup & restore.

What about CloudWatch logs? As long as your stack doesn’t destroy those resources, they’ll be kept in perpetuity for you. You may want to further back them up.

What about your load balancer logs? Here you have two options. You can create the S3 log bucket *outside* of your infra code. In that case it won’t get cleaned up during a destroy. Alternatively, you can create a meta bucket for load balancer logs, and copy those over regularly. Then when you clean up your infra, you can do a bucket destroy with the --force option.


Some Things Remain Manual but Can Be Made Easier

One thing that remains manual in AWS is the SSL certificate creation. You can “request” a new certificate and select DNS validation.

When you do this, incorporate the certificate control CNAME and certificate control record into the infra code as variables. Then copy/paste these two values from your certificate dashboard.

Assuming your nameservers are pointing to AWS, the certificate validation code should spot the above secret control record in DNS. When it does it will conclude that you control the zone, and therefore validate your SSL certificate.

Once it shows VALID on the dashboard, copy the SSL certificate ARN, and pop that into your Terraform code. You will add it as part of an SSL listener to your ALB (application load balancer) configuration.


Don’t Just Monitor Metrics

Monitoring is of course important. You’ll want to set up a Prometheus server that can do discovery. This allows it to dynamically configure and learn about new servers, so it can monitor those too. It does this by using the AWS API to find out what is there to monitor.

All of this monitoring is crucial, but it applies mainly to server metrics. What is the load average, CPU utilization, memory or disk usage?

What about your log counts? As I describe above, a Logstash misconfiguration meant that log records didn’t show up. However, this was only noticed through manual discovery. We want that to be automatically discovered!

Do that by creating checks that count records, and alert on counts that fall outside the range you expect.
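Here is a minimal sketch of such a check. It assumes you can already get a per-source record count for some time window (from Elasticsearch or wherever your logs land) and that you’ve picked a minimum you expect from each source. The names and thresholds are hypothetical.

```python
from typing import Dict, List


def silent_sources(counts: Dict[str, int],
                   minimums: Dict[str, int]) -> List[str]:
    """Return an alert message for every source that logged fewer records than expected.

    A source missing from `counts` entirely is treated as having logged zero.
    """
    alerts = []
    for source, minimum in minimums.items():
        seen = counts.get(source, 0)
        if seen < minimum:
            alerts.append(
                f"{source}: saw {seen} records, expected at least {minimum}"
            )
    return alerts
```

In our case the load balancer buckets would have shown up with a count of zero on day one, instead of a week later after a day of troubleshooting.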

Conclusion

To conclude, Terraform is a great tool with a growing community and multi-cloud capabilities. Always update automation scripts first to avoid errors, and be aware of account limits. You can also validate other data with checksums, by creating your own custom methods. You’ll need to think intelligently about your application, and what type of checks make sense.